Reality is no longer something you see. It’s something you decode.

What you’re looking at might not be real.

What you remember might have been engineered.

What you trust might already be compromised.

The line between truth and illusion has vanished. Most haven’t noticed.


From Early Image Recognition to Synthetic Realities

In 2009, I launched a product enabling users to upload images of books and DVDs to compare prices across platforms like Amazon and Walmart.

It used cloud-based image recognition—cutting-edge for its time. Today, generative AI creates images and video indistinguishable from reality. The leap from recognizing reality to fabricating it has redefined what's possible—and what’s at risk.

When I started in computer vision we were teaching machines to recognize reality. Today, people are teaching machines to fabricate it.

Deepfake tools are everywhere. They’re cheap, fast and good enough to fool most people.

What you see is no longer proof of what’s real.

Critical thinking isn’t optional, it’s survival.

Understanding GANs: The Backbone of Generative AI

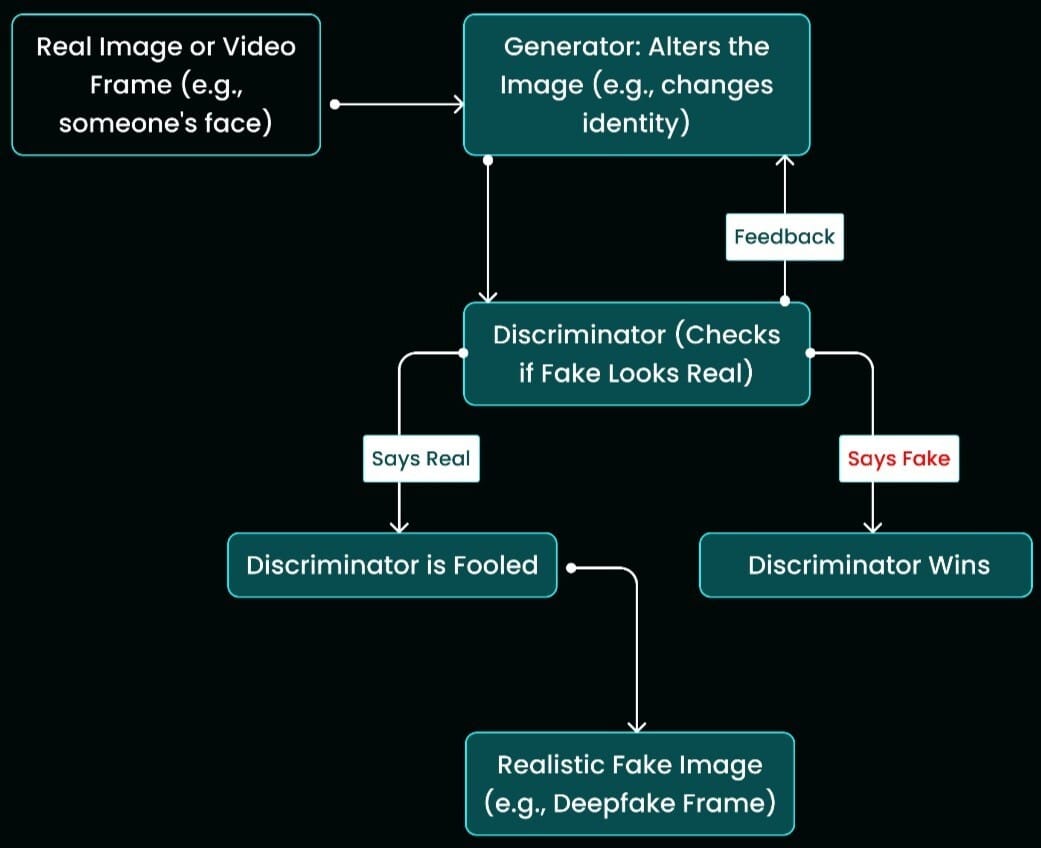

In 2014, Ian Goodfellow and colleagues introduced Generative Adversarial Networks (GANs), a framework where two neural networks, the generator and the discriminator, compete in a zero-sum game.
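The zero-sum game can be made concrete with the value function from the original GAN paper, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))]: the discriminator tries to push it up, the generator tries to push it down. Here is a minimal sketch, assuming the discriminator's outputs are already available as probabilities. The function name `gan_value` and the sample numbers are illustrative only, not taken from any particular library.

```python
import math

def gan_value(d_real, d_fake):
    """Estimate V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs (probability of "real") on real samples.
    d_fake: discriminator outputs (probability of "real") on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

# Made-up outputs from a discriminator that is confident and correct.
confident = gan_value([0.9, 0.95], [0.1, 0.05])

# A discriminator that cannot tell real from fake answers 0.5 everywhere,
# which yields -log(4), the value at the game's theoretical equilibrium.
fooled = gan_value([0.5, 0.5], [0.5, 0.5])

print(f"confident discriminator: {confident:.3f}")
print(f"fooled discriminator:    {fooled:.3f}")
```

In a real GAN both networks are updated by gradient descent on this quantity (or a variant of it); the sketch only shows what is being fought over.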

The generator creates images, while the discriminator tries to tell them apart from real data. Through this adversarial process, the generator learns to produce increasingly realistic images.

A significant milestone came in 2016 with the paper "Invertible Conditional GANs for Image Editing" by Perarnau et al. This work introduced methods to map real images into a latent space, enabling precise edits based on attributes like age or expression.

This capability laid the groundwork for sophisticated image manipulation techniques.

🗓️ Noteworthy Upcoming Events

| Conference on Computer Vision and Pattern Recognition 2025 | ACM Multimedia 2025 Grand Challenge | AICIP 2025 Workshop on Generative AI for Forensics and Security Applications |
|---|---|---|
| June 11–15, 2025 | October 27–31, 2025 | September 14–17, 2025 |
| Nashville, TN | Dublin, Ireland | Anchorage, Alaska, USA |
| Register here. | Register here. | Register here. |
| The IEEE / CVF Computer Vision and Pattern Recognition Conference (CVPR) is the premier annual computer vision event, comprising the main conference and several co-located workshops and short courses. With its high quality and low cost, it provides exceptional value for students, academics and industry researchers. | This ACM Multimedia 2025 challenge builds upon the successful 2024 Grand Challenge hosted at ACM Multimedia 2024, which attracted participation from 194 teams. The challenge focuses on the detection and localization of deepfakes. The latter involves identifying the timestamps where a video has been manipulated. This year the challenge is based on the AV-Deepfakes-1M+ dataset. | This workshop promotes research into deepfake detection, adversarial robustness, and secure data synthesis for criminal investigations. |

The Rise of Hyper-Realistic Deepfakes

By 2018, the potential of GANs reignited interest in computer vision, signaling a shift toward generative AI.

Today, the quality of deepfake content has reached a level where distinguishing real from fake is increasingly challenging. Modern tools can generate photorealistic images and videos from simple text prompts. For instance, Google's Imagen 4, released in May 2025, delivers high-fidelity images with enhanced text rendering capabilities. Similarly, Ideogram 3.0 excels at producing images with legible text, a feature previously difficult to achieve.

Open-source platforms like ComfyUI have also made it easier for developers to experiment with and deploy advanced generative models.

🕚 Balanced & Insightful

The Dual Challenge: Creation and Detection

As synthetic media becomes more widespread, the demand for reliable detection grows. AI vision systems can themselves be deceived: adversarial attacks are a real concern, especially in safety-critical systems like autonomous driving. A small change to a stop sign that a human would barely notice, for instance, can cause an AI to misread or ignore it, with potentially dangerous outcomes.
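To see why a tiny change can flip a model's decision, here is a toy sketch in the style of the fast gradient sign method (FGSM). It assumes a hypothetical linear classifier where a positive score means "stop sign"; the weights, bias, and input values are invented for illustration. Real attacks target deep networks and use their gradients, but the mechanics are the same: nudge every input feature slightly in the direction that hurts the score most.

```python
def score(weights, bias, x):
    """Linear classifier score; positive means 'stop sign' in this toy setup."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def fgsm_linear(weights, x, epsilon):
    """Shift each feature by at most epsilon in the direction that lowers the score.

    For a linear model the gradient of the score with respect to the input
    is simply the weight vector, so the step is -epsilon * sign(weight).
    """
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical model and input (all values are made up).
weights = [0.6, -0.4, 0.9, -0.7]
bias = -0.1
x = [0.5, 0.2, 0.4, 0.3]
epsilon = 0.15  # maximum change allowed per feature

x_adv = fgsm_linear(weights, x, epsilon)
original_score = score(weights, bias, x)
adversarial_score = score(weights, bias, x_adv)

print("original score:   ", round(original_score, 3))     # positive: 'stop sign'
print("adversarial score:", round(adversarial_score, 3))  # negative: misclassified
```

No single feature moves by more than `epsilon`, yet the small shifts add up across all features and push the score across the decision boundary.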

To counter these risks, researchers are developing advanced detection systems that can identify and mitigate attempts to deceive AI. These tools are becoming essential wherever trust in visual data is non-negotiable.

At Sprinklenet, we’ve explored hybrid approaches to deepfake detection, combining patch-based feature extraction with classifier ensembles. This layered method improves the system’s ability to catch subtle artifacts that single-model approaches often miss.
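As a rough illustration of the general idea (not Sprinklenet's actual system), the sketch below splits a grayscale image into patches, computes simple statistics per patch, and lets several weak classifiers vote on each patch. Everything here is an assumption made for the example: the feature choices, the thresholds, and names such as `too_smooth` and `suspicious_patch_ratio`. Production detectors would use learned features and trained models instead of hand-written rules.

```python
from statistics import mean, pvariance

def extract_patches(image, size):
    """Split a 2D grayscale image (list of rows) into non-overlapping size x size patches."""
    patches = []
    for r in range(0, len(image) - size + 1, size):
        for c in range(0, len(image[0]) - size + 1, size):
            patches.append([row[c:c + size] for row in image[r:r + size]])
    return patches

def patch_features(patch):
    """Compute simple per-patch statistics (stand-ins for learned features)."""
    pixels = [p for row in patch for p in row]
    return {"mean": mean(pixels), "variance": pvariance(pixels)}

# Hypothetical weak classifiers: each returns True if a patch looks suspicious.
def too_smooth(features):
    return features["variance"] < 1.0

def too_bright(features):
    return features["mean"] > 250

def too_dark(features):
    return features["mean"] < 5

def ensemble_flags(features, classifiers, min_votes=2):
    """Flag a patch only when at least min_votes classifiers agree."""
    votes = sum(1 for clf in classifiers if clf(features))
    return votes >= min_votes

def suspicious_patch_ratio(image, size, classifiers):
    """Return the fraction of patches flagged by the ensemble."""
    patches = extract_patches(image, size)
    flagged = sum(ensemble_flags(patch_features(p), classifiers) for p in patches)
    return flagged / len(patches)

# Toy 4x4 image: the top-left 2x2 block is unnaturally flat and saturated.
image = [
    [255, 255, 10, 200],
    [255, 255, 90, 30],
    [40, 120, 60, 180],
    [200, 15, 130, 20],
]
ratio = suspicious_patch_ratio(image, 2, [too_smooth, too_bright, too_dark])
print("suspicious patch ratio:", ratio)
```

Requiring agreement between classifiers is one simple way to trade a few missed artifacts for fewer false alarms; the vote threshold is a tuning choice, not a fixed rule.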

Implications for Business Leaders

The advent of highly convincing synthetic media has profound implications across industries. Organizations must recognize that visual content can no longer be taken at face value. Implementing robust verification processes and staying informed about advancements in both generative and detection technologies is crucial.

As we navigate this new landscape, understanding the capabilities and limitations of AI-generated content will be essential in maintaining trust and integrity in digital communications.

📰 AI Trends & News

Industry Leaders Advocate for 'No Fakes Act' to Combat Deepfakes

On May 21, 2025, tech and music industry leaders, including YouTube executives and artists like Martina McBride, testified before the U.S. Senate Judiciary Committee in support of the bipartisan 'No Fakes Act.' This proposed legislation aims to protect individuals from unauthorized AI-generated deepfakes and voice clones, holding creators and platforms accountable. More here…

Deepfake Scams Become More Sophisticated and Costly

Deepfake scams are evolving rapidly, with criminals now able to create real-time, multilingual impersonations. In one notable case, a British engineering firm lost £20 million after scammers used deepfake video to impersonate company executives during a Zoom call, highlighting the growing cybersecurity risks associated with synthetic media. More here…

Research Reveals Vulnerabilities in Deepfake Detection Tools

A study by CSIRO has found that current deepfake detection systems struggle to identify manipulated content in real-world scenarios, correctly spotting fakes only about two-thirds of the time. This underscores the ongoing "cat and mouse" game between deepfake creators and detection technologies. More here…

🔧 Legacy Spotlight

Breathing New Life Into Legacy Image Systems

Many enterprises still rely on aging systems that were built around static image databases: document scanning, ID verification, medical imaging, or security footage archives. These systems weren’t designed with AI in mind, but that doesn’t mean they’re obsolete.

At Sprinklenet, we've helped clients augment legacy pipelines by embedding AI-driven layers: OCR upgrades, real-time anomaly detection, facial comparison, even synthetic data generation to improve model performance where labeled data is scarce.

One of the most impactful transformations comes when legacy image stores are connected to modern generative or classification models. Suddenly, an old archive becomes a living dataset—something that can train, test, or feed intelligent workflows.

Legacy systems don’t need to be replaced overnight. With the right architecture, they can become powerful foundations for the next generation of AI tools.

🗓️ Coming Next: Aspirations, Alignment and AI.

How to get leaders, developers and product teams on the same page.

Jamie Thompson: Sprinklenet

Right now, you may be considering how AI can truly transform your company, perhaps by streamlining a key process or creating a better customer experience.

The big question then becomes: how do you make that vision a practical and efficient reality?

At Sprinklenet, we're actively working with businesses like yours. We take the latest AI advancements and implement them to deliver concrete improvements you can see.

Our focus is on tangible outcomes, not just potential.

Not sure where to start with AI?

Let Jamie walk you through a personalized systems review—tailored to your existing infrastructure.

✅ Discover where AI can add real impact

✅ Avoid common scaling mistakes

✅ Get clarity 👇🏼
