This Week's Focus ⤵️

Systems 🚀

Good morning, esteemed readers and people of the Internet.

I hope you’ve fed your cats, your children, and your spouse, and had your coffee.

If you don’t have cats, kids, or a spouse—or don’t drink coffee—I hope you slept well.

This weekend edition focuses on systems: the connective tissue between humans, AI, and processes.

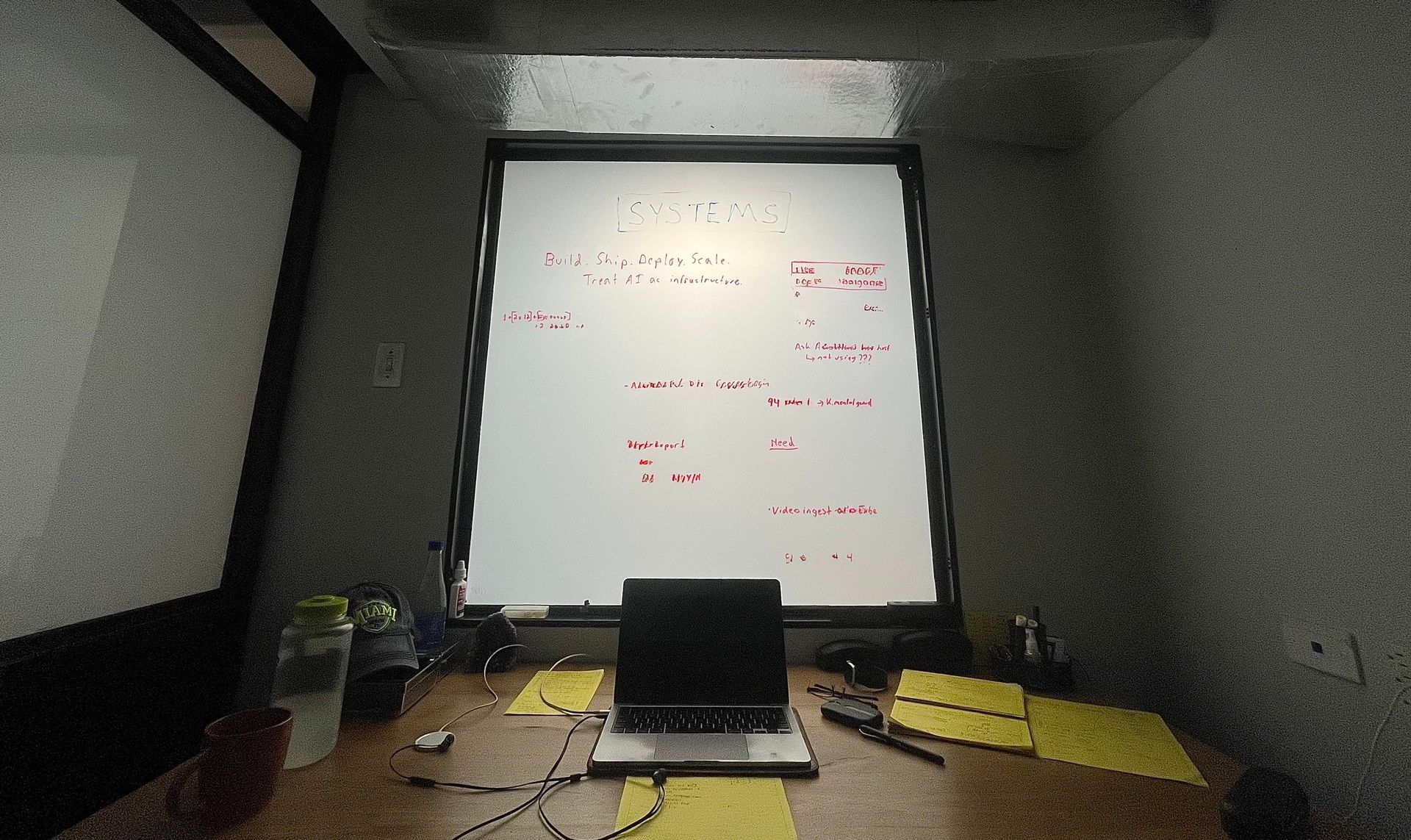

I keep the word “SYSTEMS” written above my desk as a daily reminder that our mission is to fuel the knowledge revolution by scaling expert humans through AI and integrated systems.

I love AI MVPs and the blend of R&D with business operations, but the future belongs to those who seamlessly connect human knowledge, AI, and processes into scalable systems within their enterprise.

And that scale will be bigger than anything we’ve seen before.

As 2026 approaches, I’m convinced that the most disruptive players — especially in non-tech industries — will be those who integrate AI into their operational fabric and train their top talent to master it.

This transition won’t reward hesitation. It will reward speed, discipline, and strategy.

If you’re a domain expert, your role isn’t being replaced — it’s being amplified. Scarcity creates value, and your expertise becomes a strategic multiplier.

The next frontier isn’t just AI adoption. It’s human enablement — training operators to thrive inside AI-driven systems. After years of building and deploying technology, I’m now focused on systematizing everything. Every enterprise should be doing the same.

Speed is the theme in enterprise AI right now. Run. Because if you don’t, someone else will.

Sponsor Spotlight 💡

Sprinklenet Knowledge Spaces

A single pane of AI configuration that unifies multi-model access, retrieval, governance, and role-specific chatbots for enterprise workflows.

Launch pilots in days. Scale to thousands of configurable gen-AI bots.

Serve your teams — and millions of customers — from one system of intelligence.

Diving Deeper 🤿

Capturing internal knowledge is the foundation for scaling your AI systems.

Start by helping your technology teams understand how work actually happens.

In tools like Knowledge Spaces, experts upload and organize data, while AI generates summaries — editable by human operators.

This “human-in-the-loop” structure is critical. Automation handles volume, but human review ensures accuracy, especially for sensitive data and documents. Once pipelines are tested, API-driven data flows can run autonomously.
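To make the review gate concrete, here is a minimal Python sketch. It assumes a simple three-state workflow (draft, approved, rejected) and a hypothetical rule that unreviewed drafts may flow automatically only after the pipeline is tested, and only for non-sensitive sources. The names `Summary`, `draft_summary`, `review`, and `publishable` are illustrative, not the Knowledge Spaces API, and the "model" is a stub that keeps the first sentence.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    DRAFT = "draft"        # AI-generated, not yet reviewed
    APPROVED = "approved"  # accepted (possibly after edits) by a human operator
    REJECTED = "rejected"  # sent back by a human operator


@dataclass
class Summary:
    doc_id: str
    text: str
    sensitive: bool
    status: Status = Status.DRAFT
    reviewer: Optional[str] = None


def draft_summary(doc_id: str, body: str, sensitive: bool) -> Summary:
    # Stand-in for a model call: keep the first sentence of the document.
    first_sentence = body.split(".")[0].strip() + "."
    return Summary(doc_id, first_sentence, sensitive)


def review(summary: Summary, reviewer: str, edited_text: Optional[str] = None,
           approve: bool = True) -> Summary:
    # The operator may edit the AI text before approving or rejecting it.
    if edited_text is not None:
        summary.text = edited_text
    summary.status = Status.APPROVED if approve else Status.REJECTED
    summary.reviewer = reviewer
    return summary


def publishable(summaries: List[Summary], pipeline_tested: bool) -> List[Summary]:
    # Approved summaries always publish. Unreviewed drafts publish only once the
    # pipeline has been tested, and never when the source is sensitive.
    return [
        s for s in summaries
        if s.status is Status.APPROVED
        or (s.status is Status.DRAFT and pipeline_tested and not s.sensitive)
    ]


docs = [
    draft_summary("contract-17", "Renewal terms change in Q3. Details follow.", sensitive=True),
    draft_summary("faq-02", "Office hours are 9 to 5. Parking is free.", sensitive=False),
]
review(docs[0], reviewer="operator-1", edited_text="Renewal terms change in Q3 (pending legal review).")
print([s.doc_id for s in publishable(docs, pipeline_tested=False)])  # ['contract-17']
print([s.doc_id for s in publishable(docs, pipeline_tested=True)])   # ['contract-17', 'faq-02']
```

The design choice worth noting: sensitivity is checked on every draft, so even a fully tested pipeline never bypasses human review for sensitive documents.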

Knowledge can also be shared externally in controlled, read-only modes. Admins decide what to expose — maintaining security while promoting collaboration.
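A minimal sketch of admin-controlled, read-only sharing, assuming a hypothetical model where internal users can read everything in a space, external users can read only the documents an admin has exposed, and external users can never write. `User`, `Space`, `can_read`, and `can_write` are illustrative names, not a real product API.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass(frozen=True)
class User:
    name: str
    external: bool  # True for partners or customers outside the enterprise


@dataclass
class Space:
    documents: Dict[str, str]
    shared_externally: Set[str] = field(default_factory=set)  # doc ids an admin chose to expose
    editors: Set[str] = field(default_factory=set)            # internal users allowed to edit


def can_read(space: Space, user: User, doc_id: str) -> bool:
    if doc_id not in space.documents:
        return False
    # Internal users see the whole space; external users see only what admins expose.
    return (not user.external) or doc_id in space.shared_externally


def can_write(space: Space, user: User) -> bool:
    # External access is read-only, regardless of any other setting.
    return (not user.external) and user.name in space.editors


space = Space(
    documents={"faq": "Public FAQ text.", "pricing-model": "Internal margin analysis."},
    shared_externally={"faq"},
    editors={"alice"},
)
alice = User("alice", external=False)
partner = User("partner-co", external=True)

print(can_read(space, partner, "faq"))            # True
print(can_read(space, partner, "pricing-model"))  # False
print(can_write(space, partner))                  # False
print(can_write(space, alice))                    # True
```

Defaulting `shared_externally` to an empty set means nothing is exposed until an admin opts a document in.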

Importantly, your data is never merged into an LLM; it stays in your governed knowledge base rather than being trained into the model.

Large language models are engines for response reasoning, not repositories for your data.
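One common way to keep data out of the model is retrieval at query time: look up relevant passages in your own store and pass them to the model inside the prompt. The sketch below assumes a toy in-memory knowledge base and uses simple keyword overlap as a stand-in for the vector search a production system would typically use. All names and documents are hypothetical, and the "model" is any function that turns a prompt into text.

```python
import re
from typing import Callable, Dict, List, Tuple

# The knowledge base lives outside the model. These entries are illustrative.
KNOWLEDGE_BASE: Dict[str, str] = {
    "returns-policy": "Customers may return hardware within 30 days with a receipt.",
    "sla": "Priority tickets receive a first response within 4 business hours.",
    "onboarding": "New partners complete a two-week onboarding program.",
}


def words(text: str) -> set:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, k: int = 2) -> List[Tuple[str, str]]:
    # Keyword overlap stands in for embedding-based search.
    q = words(question)
    scored = [(len(q & words(text)), doc_id, text) for doc_id, text in KNOWLEDGE_BASE.items()]
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(question: str, passages: List[Tuple[str, str]]) -> str:
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return f"Answer using only this context.\n{context}\n\nQuestion: {question}"


def answer(question: str, model: Callable[[str], str]) -> str:
    # The model sees retrieved passages in the prompt; it is never trained on them.
    return model(build_prompt(question, retrieve(question)))


# Any prompt-to-text callable can act as the model; this one just echoes the prompt.
print(answer("How fast is the first response on priority tickets?", model=lambda p: p))
```

Because the knowledge base is only read at query time, updating or deleting a document takes effect on the next question without touching the model.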

You have many LLM options — OpenAI’s ChatGPT, xAI’s Grok, Google Gemini, DeepSeek, Qwen, and others. No single model fits all use cases.

That’s why configuration flexibility matters. Switching models should be seamless — preserving security, avoiding lock-in, and future-proofing your system.
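Here is a minimal sketch of what "seamless switching" can look like, assuming each bot names its model in configuration and every provider sits behind the same prompt-in, text-out interface. The registry keys are hypothetical labels rather than official model identifiers, and the adapters are stubs; a real adapter would call the provider's SDK or HTTP API.

```python
from dataclasses import dataclass
from typing import Callable, Dict

ModelFn = Callable[[str], str]


def make_stub(provider: str) -> ModelFn:
    # Placeholder adapter so the sketch runs without network access or API keys.
    return lambda prompt: f"[{provider}] {prompt}"


# Hypothetical registry: one entry per provider, all with the same signature.
REGISTRY: Dict[str, ModelFn] = {
    name: make_stub(name) for name in ("openai", "xai", "gemini", "deepseek", "qwen")
}


@dataclass
class BotConfig:
    name: str
    model: str  # which registry entry this bot uses; changing it is a config edit


def run(bot: BotConfig, prompt: str) -> str:
    model = REGISTRY.get(bot.model)
    if model is None:
        raise ValueError(f"Bot {bot.name!r} references unknown model {bot.model!r}")
    return model(prompt)


bot = BotConfig(name="contracts-assistant", model="openai")
print(run(bot, "Summarize clause 4."))  # [openai] Summarize clause 4.
bot.model = "qwen"                      # swap providers without touching calling code
print(run(bot, "Summarize clause 4."))  # [qwen] Summarize clause 4.
```

The calling code never imports a vendor SDK directly, which is what keeps a model swap from turning into a rewrite.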

Train your people early. Humans remain the conductors.

(See our previous edition on Scaling Knowledge for deeper insight.)

Customized front-ends then bring this intelligence to life — powering microsites, chatbots, apps, or IT dashboards — all drawing from one governed knowledge base.

At Sprinklenet, we’re exploring open-sourcing chatbot templates to let partners build faster. The creative potential is endless.

Core Principles in Practice ⚙️

Knowledge Capture and Governance: Ingest and tag enterprise data with AI. Apply access-control rules to protect IP and ensure data security in internal or shared spaces.

Model Flexibility: Select and switch LLMs dynamically to avoid lock-in. Adapt to evolving technologies for precise, context-specific outputs in complex queries.

Human Training Integration: Involve staff early as AI conductors. Provide targeted education to optimize system use and decision-making.

Customizable Interfaces: Design back-end systems to feed multiple front-ends. Preserve brand control and user experience across apps and workflows.

Speed and Adaptability: Prioritize rapid deployment and iteration. Embed guardrails to maintain enterprise oversight amid fast-paced AI advancements.

The best way to predict the future is to create it.

Legacy Spotlight 🔧

Legacy IT systems often hold vast untapped knowledge. Integrating AI requires careful bridging.

Start by mapping existing data to modern tools like Knowledge Spaces, and ensure compatibility so current operations aren’t disrupted. Careful mapping preserves data integrity and lets you apply access controls to legacy data.
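As an illustration of the mapping step, here is a minimal sketch that converts a legacy CSV export into documents a modern knowledge base could ingest. The column names, the field map, and the rule that marks certain departments as restricted are all hypothetical; real legacy schemas and access policies will differ. Only the standard-library `csv` and `io` modules are used.

```python
import csv
import io
from typing import Dict, List

# Hypothetical mapping from legacy column names to knowledge-base fields.
FIELD_MAP = {"DOC_NO": "doc_id", "TTL": "title", "BODY_TXT": "body", "DEPT_CD": "department"}

# Hypothetical access rule: records from these departments are restricted.
RESTRICTED_DEPARTMENTS = {"LEGAL", "HR"}


def map_legacy_row(row: Dict[str, str]) -> Dict[str, str]:
    # Fail loudly on schema drift instead of silently importing partial records.
    missing = [col for col in FIELD_MAP if col not in row]
    if missing:
        raise ValueError(f"Legacy row is missing columns: {missing}")
    doc = {new: row[old].strip() for old, new in FIELD_MAP.items()}
    doc["access"] = "restricted" if doc["department"] in RESTRICTED_DEPARTMENTS else "internal"
    return doc


def import_legacy_csv(text: str) -> List[Dict[str, str]]:
    return [map_legacy_row(row) for row in csv.DictReader(io.StringIO(text))]


legacy_export = """DOC_NO,TTL,BODY_TXT,DEPT_CD
A-100,Vendor terms, Net 30 payment terms ,LEGAL
B-200,Cafeteria hours,Open 8-3 weekdays,OPS
"""
for doc in import_legacy_csv(legacy_export):
    print(doc["doc_id"], doc["access"])
# A-100 restricted
# B-200 internal
```

Keeping the original document IDs makes every imported record traceable back to the legacy system, which helps with the integrity checks mentioned above.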

Human training bridges the gap. It empowers operators to leverage AI atop legacy infrastructures.

Ultimately, this hybrid approach scales expertise. It turns outdated systems into assets for the knowledge revolution while safeguarding IP.

Closer to Alignment 🤝🏼

Executives should prioritize cross-functional teams to align on AI integrations.

Conduct workshops blending IT, operations, and domain experts for shared understanding. This fosters buy-in and accelerates adoption.

Emphasize speed in change management. Use phased training to build confidence.

Alignment ensures humans and systems work in harmony. It mitigates risks like data leaks through collaborative guardrails.

Balanced & Insightful ⚖️

While AI promises scale, you need to balance it with human oversight to maximize value.

Assess LLM options against enterprise needs. Test for precision in complex scenarios. This prevents lock-in and enhances adaptability.

Train selectively. Focus on high-value conductors who configure systems. In our experience, this has yielded efficiency gains of 50–80%.

Acknowledge challenges like integration costs. Phased pilots demonstrate ROI, but be ready to scale fast.

Consider this matrix of human knowledge versus AI knowledge within systems:

| Role | Experts | AI Systems | Operators |
|---|---|---|---|
| Skills & Focus | Deep domain insight; curate and refine data. | Ingest, tag, and summarize data; enforce guardrails. | Use configured systems, monitor outputs, and ensure precision. |
| Responsibilities | Identify key data; set sharing controls. | Generate context-aware responses. | Maintain daily alignment between humans and machines. |

Jamie Thompson

I believe 2026 will be a defining year for enterprises that empower their best people to help configure AI knowledge tools, and then deploy AI systems that scale the output of exceptionally high-quality information, decision-support data, and experiential programs.

If I were the CEO of a Fortune 500 company, I’d be looking at how to scale smart AI systems, how to upskill everyone, and how to eliminate the laggards who aren’t on board with AI-powered systems. Everyone’s job is being redefined, and everyone needs to adapt to this knowledge revolution.

In 2026, enterprises have the chance to hit new growth metrics, improve customer satisfaction, reduce costs, and scale their missions to new heights.

Need Expert Guidance?

Book a focused 1-hour strategy session with Jamie.

✅ Evaluate current architecture and readiness

✅ Identify quick wins and hidden risks

✅ Get tailored, actionable next steps

👇🏼 Book a Strategy Call

Today’s Spotify Playlist 🎵

Maybe the title of this edition gives it away: I’m listening to the Boss today. I often find a song I like and keep it on repeat in the background all day. So, in the spirit of sharing the background beats in my head, here’s the playlist.

Short and sweet.

Born to run.

Have a great weekend everyone.

🎺🎧 Note: Web Edition Only